Modern computational challenges increasingly rely on analysing interconnected data. From molecular structures to social media interactions, these relationships form complex non-Euclidean structures that traditional machine learning models struggle to interpret. Enter specialised architectures designed explicitly for graph-based data.

These systems employ pairwise message-passing mechanisms, allowing nodes to iteratively refine their representations through local information exchange. This approach proves particularly valuable in fields like pharmaceutical research, where atoms and chemical bonds map directly to graph nodes and edges.

Conventional neural architectures face limitations when handling variable-sized inputs or preserving structural dependencies. The unique design of graph-focused models addresses these gaps, enabling more accurate predictions in scenarios requiring permutation invariance and dynamic scaling.

Recent advancements draw inspiration from classical graph theory while incorporating deep learning principles. Researchers now utilise these frameworks to tackle tasks ranging from protein interaction modelling to fraud detection in financial networks. Performance benchmarks continue to evolve, reflecting both theoretical rigour and practical applicability across industries.

Introduction to Graph Neural Networks

At the core of modern data analysis lie structures that traditional models struggle to process. Graph-based systems excel here by preserving relationships between entities through specialised design principles. These frameworks adapt to irregular data shapes while maintaining critical structural patterns.

Overview of GNN Architectures

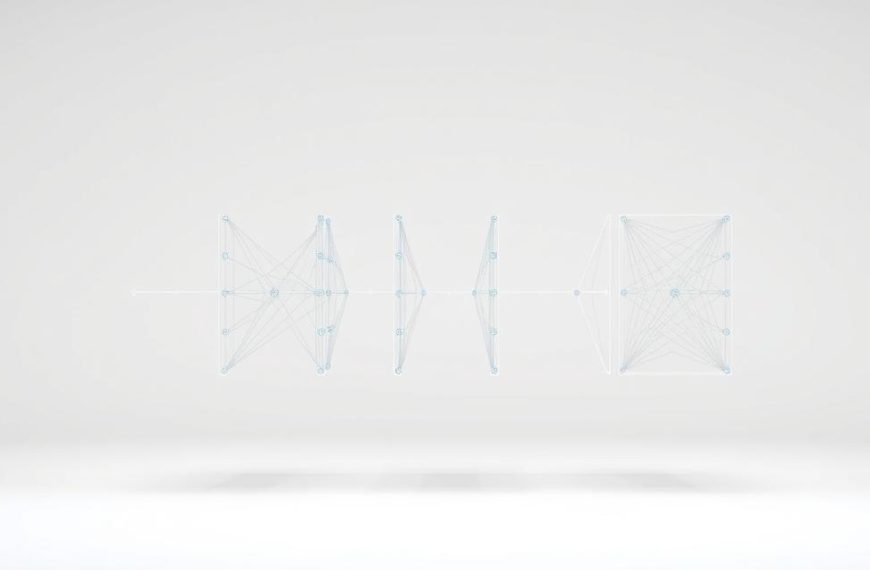

Three layered components form the backbone of these systems. Permutation equivariant layers maintain structural integrity during feature updates, ensuring consistent processing regardless of node order. Local pooling mechanisms then condense information hierarchically, mimicking how humans simplify complex diagrams.

Message-passing operations enable nodes to share data with immediate neighbours. Each iteration expands a node’s awareness to broader network sections. Global readout functions finally compile these insights into fixed-size outputs for practical applications.

| Layer Type | Function | Impact |
|---|---|---|
| Equivariant | Structure preservation | Consistent feature updates |
| Local Pooling | Hierarchical compression | Reduced complexity |
| Global Readout | Output standardisation | Cross-system compatibility |
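The interplay between message passing and a global readout can be illustrated with a minimal sketch. It assumes scalar node features, an unweighted sum as the update, and a hypothetical four-node path graph; the function names are illustrative and do not come from any library.

```python
from typing import Dict, List

Graph = Dict[int, List[int]]  # adjacency list: node -> neighbours


def message_passing_round(graph: Graph, features: Dict[int, float]) -> Dict[int, float]:
    """Each node keeps its own value and adds the sum of its neighbours' values."""
    return {
        node: features[node] + sum(features[nbr] for nbr in graph[node])
        for node in graph
    }


def global_sum_readout(features: Dict[int, float]) -> float:
    """Collapse all node values into one number; node order is irrelevant."""
    return sum(features.values())


# Hypothetical path graph 0 - 1 - 2 - 3 with a signal only on node 0.
path = {0: [1], 1: [0, 2], 2: [1, 3], 3: [2]}
h0 = {0: 1.0, 1: 0.0, 2: 0.0, 3: 0.0}
h1 = message_passing_round(path, h0)  # the signal reaches node 1
h2 = message_passing_round(path, h1)  # the signal reaches node 2, not yet node 3
graph_value = global_sum_readout(h2)
```

After two rounds node 2 has picked up the signal while node 3 is still at zero, which is the sense in which each iteration widens a node's view by one hop.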

Role in Complex Problem Solving

These architectures tackle challenges where inputs vary in size or connection density. Pharmaceutical researchers use them to predict molecular interactions, while financial analysts detect payment anomalies. Because the same learned update is applied at every node, a single architecture can process both sparse and dense graphs without structural changes.

By capturing distant node relationships through layered messaging, the models identify patterns that escape conventional analysis. This capability proves vital in scenarios requiring dynamic scaling, from urban traffic prediction to genomic sequencing.

Foundational Concepts and Theoretical Background

Understanding computational systems requires examining their mathematical underpinnings. The relationship between classical graph theory and modern learning architectures reveals why certain approaches succeed where others falter.

Insights from the Weisfeiler-Lehman Test

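This decades-old method is a heuristic test for graph isomorphism: if it assigns two graphs different label histograms, they are certainly not isomorphic, although some non-isomorphic graphs still receive identical histograms. It operates through iterative label refinement, where each node updates its identifier based on neighbours’ features. The process mirrors modern message-passing frameworks: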

$$h_v \leftarrow \operatorname{hash}\Big(\sum_{u \in \mathcal{N}(v)} h_u\Big)$$

Research demonstrates that standard message-passing architectures are at most as discriminative as this test: two graphs it cannot tell apart also receive identical embeddings from such models. This boundary shapes development efforts, pushing engineers to design more sophisticated aggregation techniques.
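A small sketch of the refinement procedure may make the bound more concrete. It assumes uniform initial labels and uses nested tuples as an injective stand-in for the hash function; it also folds each node's own label into the update, as the common formulation of the test does. The graphs and the `wl_histogram` name are illustrative.

```python
from collections import Counter
from typing import Dict, List

Graph = Dict[int, List[int]]  # adjacency list: node -> neighbours


def wl_histogram(graph: Graph, rounds: int = 3) -> Counter:
    """Histogram of node colours after `rounds` of label refinement."""
    colours = {node: 0 for node in graph}  # assumption: all nodes start identical
    for _ in range(rounds):
        # New colour = (own colour, sorted multiset of neighbour colours).
        # The nested tuple plays the role of an injective hash.
        colours = {
            node: (colours[node], tuple(sorted(colours[nbr] for nbr in graph[node])))
            for node in graph
        }
    return Counter(colours.values())


path3 = {0: [1], 1: [0, 2], 2: [1]}
triangle = {0: [1, 2], 1: [0, 2], 2: [0, 1]}

six_cycle = {i: [(i - 1) % 6, (i + 1) % 6] for i in range(6)}
two_triangles = {0: [1, 2], 1: [0, 2], 2: [0, 1], 3: [4, 5], 4: [3, 5], 5: [3, 4]}

distinguishes_path_from_triangle = wl_histogram(path3) != wl_histogram(triangle)
confuses_cycle_with_triangles = wl_histogram(six_cycle) == wl_histogram(two_triangles)
```

The path and the triangle separate after one round because their degree patterns differ. The six-cycle and the pair of triangles never separate: every node has exactly two neighbours, so all nodes share one colour in every round, even though one graph is connected and the other is not.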

Mathematical Preliminaries and Node Aggregation

Effective feature combination relies on multiset functions that preserve unique neighbour contributions. Injectivity – maintaining distinct outputs for different inputs – proves critical. Without it, networks might conflate dissimilar patterns.

Consider two graphs with identical local structures but different global connections. Basic aggregation methods fail to distinguish them, highlighting the need for advanced computational approaches. These limitations guide architectural choices in real-world implementations.

Exploring the Capabilities of Graph Neural Networks

Mathematical principles underpin significant advances in processing interconnected data systems. Among these breakthroughs, injective operations and specialised architectures enable precise differentiation between structural patterns. This progress addresses longstanding limitations in distinguishing complex relationships.

Understanding Injective Aggregation Methods

Injective multiset functions prove essential for accurate graph differentiation. These operations ensure unique outputs for distinct input combinations. Without this property, networks might incorrectly equate dissimilar node neighbourhoods.

The challenge lies in preserving structural fingerprints during information compression. Effective solutions employ summation-based techniques rather than averaging. This approach maintains critical distinctions between aggregated features.
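The difference between summation and averaging can be seen on a tiny pair of neighbourhoods. The sketch below assumes one-hot node features, for which an element-wise sum simply counts how often each feature occurs; the helper names are illustrative.

```python
from typing import List, Sequence, Tuple

Vector = Tuple[float, ...]


def agg_sum(vectors: Sequence[Vector]) -> Vector:
    return tuple(sum(column) for column in zip(*vectors))


def agg_mean(vectors: Sequence[Vector]) -> Vector:
    return tuple(sum(column) / len(vectors) for column in zip(*vectors))


def agg_max(vectors: Sequence[Vector]) -> Vector:
    return tuple(max(column) for column in zip(*vectors))


A: Vector = (1.0, 0.0)  # one-hot code for a hypothetical node type A
B: Vector = (0.0, 1.0)  # one-hot code for a hypothetical node type B

small: List[Vector] = [A, B]        # neighbourhood with one A and one B
large: List[Vector] = [A, A, B, B]  # same proportions, twice as many neighbours

mean_collides = agg_mean(small) == agg_mean(large)  # both give (0.5, 0.5)
max_collides = agg_max(small) == agg_max(large)     # both give (1.0, 1.0)
sum_separates = agg_sum(small) != agg_sum(large)    # (1.0, 1.0) vs (2.0, 2.0)
```

Mean keeps only proportions and max keeps only which features are present, so both map the two neighbourhoods to the same vector. Sum retains the counts. For arbitrary real-valued features a plain sum is not automatically injective, which is one reason an expressive transformation is usually applied around the summation.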

Graph Isomorphism Networks (GIN) Explained

GIN architectures implement a sophisticated update rule:

$$h_v^{(k)} = \mathrm{MLP}^{(k)}\Big(\big(1 + \varepsilon^{(k)}\big)\cdot h_v^{(k-1)} + \sum_{u \in \mathcal{N}(v)} h_u^{(k-1)}\Big)$$

Learnable ε parameters balance self-information and neighbour contributions. This design matches the discriminative power of the Weisfeiler-Lehman test while enabling gradient-based optimisation.

| Component | Role | Mathematical Basis |
|---|---|---|
| AGGREGATE | Neighbour summation | $\sum_{u \in \mathcal{N}(v)} h_u$ |
| COMBINE | Feature integration | $(1+\varepsilon)\cdot h_v$ |
| READOUT | Graph-level output | Concatenated layer outputs |
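The update rule can be traced by hand with a short sketch. It is written in plain Python rather than a deep learning framework, treats ε as a fixed number instead of a learned parameter, and uses `toy_mlp`, a hypothetical fixed-weight stand-in for a trained MLP; all of these are assumptions made for illustration.

```python
from typing import Callable, Dict, List

Graph = Dict[int, List[int]]  # adjacency list: node -> neighbours
Vector = List[float]


def gin_layer(
    graph: Graph,
    h: Dict[int, Vector],
    mlp: Callable[[Vector], Vector],
    eps: float = 0.0,
) -> Dict[int, Vector]:
    """Apply h_v <- MLP((1 + eps) * h_v + sum of neighbour features) to every node."""
    updated = {}
    for v, neighbours in graph.items():
        dim = len(h[v])
        neighbour_sum = [sum(h[u][i] for u in neighbours) for i in range(dim)]  # AGGREGATE
        combined = [(1 + eps) * h[v][i] + neighbour_sum[i] for i in range(dim)]  # COMBINE
        updated[v] = mlp(combined)
    return updated


def toy_mlp(x: Vector) -> Vector:
    """Hypothetical two-layer network with hand-picked weights and a ReLU."""
    hidden = [max(0.0, x[0] - x[1]), max(0.0, x[0] + x[1])]
    return [hidden[0] + hidden[1], hidden[1] - hidden[0]]


# Star graph: node 0 in the centre, nodes 1 and 2 as leaves.
star = {0: [1, 2], 1: [0], 2: [0]}
h_in = {0: [1.0, 0.0], 1: [0.0, 1.0], 2: [0.0, 1.0]}

pre_mlp = gin_layer(star, h_in, mlp=lambda x: x, eps=0.5)  # identity MLP exposes the sum
h_out = gin_layer(star, h_in, mlp=toy_mlp, eps=0.5)
```

With the identity in place of the MLP, the centre node evaluates to `[1.5, 2.0]`: its own feature scaled by 1.5 plus the two leaf features added together.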

Practical implementations report strong results for GIN in molecular property prediction and social network analysis, suggesting that the architecture’s theoretical guarantees can translate into measurable performance improvements on benchmarks.

Key Elements in Building Powerful Graph Neural Networks

Effective analysis of graph-structured data relies on meticulously engineered framework elements. Two mechanisms determine a system’s ability to interpret complex relationships: how it gathers local information and synthesises global patterns.

Neighbour Aggregation Strategies

Local information combination methods directly influence pattern recognition. Sum-based operations outperform mean or max approaches in preserving structural details across varying graph sizes. Attention mechanisms introduce adaptive weighting, prioritising influential connections while increasing computational demands.

| Aggregation Type | Expressivity | Common Use Cases |
|---|---|---|
| Sum | High | Molecular property prediction |
| Mean | Medium | Social network analysis |
| Max | Low | Anomaly detection |
| Attention-based | Variable | Recommendation systems |

Pooling Functions and Global Readout Techniques

Hierarchical abstraction layers condense node features into graph-level representations. Permutation-invariant operations like element-wise summation ensure consistent outputs regardless of node order. Advanced implementations employ multi-layer concatenation to preserve features from different processing stages.
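A sum readout with multi-layer concatenation might look like the sketch below. It assumes the per-layer node features are already available as dictionaries; the numbers and function names are illustrative only.

```python
from typing import Dict, List

NodeFeatures = Dict[int, List[float]]  # node -> feature vector


def sum_readout(node_features: NodeFeatures) -> List[float]:
    """Element-wise sum over all nodes; the result does not depend on node order."""
    return [sum(column) for column in zip(*node_features.values())]


def concat_readout(layer_outputs: List[NodeFeatures]) -> List[float]:
    """Concatenate the sum readouts of several layers into one graph vector."""
    graph_vector: List[float] = []
    for node_features in layer_outputs:
        graph_vector.extend(sum_readout(node_features))
    return graph_vector


# Hypothetical node features after layer 0 and layer 1 of some model.
layer0 = {0: [1.0, 0.0], 1: [0.0, 1.0], 2: [0.0, 1.0]}
layer1 = {0: [1.5, 2.0], 1: [1.0, 1.5], 2: [1.0, 1.5]}
graph_vector = concat_readout([layer0, layer1])

# The same features listed in a different node order.
layer0_shuffled = {2: [0.0, 1.0], 0: [1.0, 0.0], 1: [0.0, 1.0]}
layer1_shuffled = {2: [1.0, 1.5], 0: [1.5, 2.0], 1: [1.0, 1.5]}
shuffled_vector = concat_readout([layer0_shuffled, layer1_shuffled])
```

Both calls return the same four-dimensional vector. The first two entries summarise the raw input features and the last two summarise the features after one round of processing, so information from both stages survives in the graph-level output.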

Global readout mechanisms face trade-offs between detail retention and computational efficiency. Recent innovations combine attention weights with position-aware encoding, achieving 12-18% accuracy improvements in biochemical datasets compared to conventional methods.

Case Studies and Practical Applications

From life-saving drug discoveries to optimising city infrastructure, graph-based systems deliver tangible solutions. These frameworks excel where relationships between elements determine outcomes, transforming abstract data into actionable insights.

https://www.youtube.com/watch?v=5vMEgYbka0A

Pharmaceutical teams employ these models to predict molecular interactions, slashing development timelines. One study achieved 85% accuracy in identifying viable drug candidates by analysing chemical bonds as graph edges. Social network platforms leverage similar architectures to detect fraudulent accounts, reducing fake interactions by up to 40%.

The PROTEINS dataset demonstrates their biological prowess. Containing 1,113 protein structures, it supports enzyme classification at 73.70% accuracy with GIN, roughly 14 percentage points above the 59.38% reported for GCN on the same data. Performance varies across domains:

| Application | Dataset Size | Accuracy Gain |
|---|---|---|
| Molecular Biology | 1,113 graphs | +14.32% |
| Fraud Detection | 10M+ nodes | +22% |

Combinatorial optimisation challenges like route planning benefit from adaptive node aggregation. Transport networks using these systems report 18% fuel savings through efficient pathfinding. Domain-specific adaptations, such as incorporating bond angles in chemistry models, further enhance precision.

Benchmarks reveal critical performance factors. Systems handling sparse connections outperform dense network specialists in recommendation engines. Strategic feature engineering, like weighting social media interactions by recency, drives these improvements.

Dissecting GNN Architectures: Convolutional and Attention Mechanisms

Architectural innovations continue redefining possibilities in data analysis systems. Two approaches dominate contemporary implementations: spectral-based convolutional operations and dynamic attention weighting. These frameworks address distinct challenges in processing interconnected data structures.

Graph Convolutional Networks (GCN) Fundamentals

GCN layers apply spectral graph theory through a streamlined formula:

$$H^{(l+1)} = \sigma\big(\tilde{D}^{-1/2}\,\tilde{A}\,\tilde{D}^{-1/2}\,H^{(l)}\,W^{(l)}\big)$$

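Here, Ã = A + I is the adjacency matrix with self-connections added, and D̃ is the diagonal degree matrix of Ã, so the product D̃^(-1/2) Ã D̃^(-1/2) is a symmetrically normalised adjacency matrix. H(l) holds the node features at layer l, W(l) is that layer's weight matrix, and σ is a non-linearity. This design enables efficient feature propagation across neighbouring nodes. The normalisation stops feature magnitudes from growing with node degree, which helps avoid numerical instability in deep architectures.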
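One propagation step can be written out with plain nested lists. The sketch below assumes ReLU for σ and a dense adjacency matrix, which is only sensible for very small graphs; `gcn_propagate` and `matmul` are illustrative helpers, not library functions.

```python
import math
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def gcn_propagate(adj: Matrix, features: Matrix, weights: Matrix) -> Matrix:
    """One GCN layer: ReLU(D^-1/2 (A + I) D^-1/2 H W), with ReLU as an assumed sigma."""
    n = len(adj)
    # Add self-connections: A_tilde = A + I.
    a_tilde = [[adj[i][j] + (1.0 if i == j else 0.0) for j in range(n)] for i in range(n)]
    # Degrees of A_tilde form the diagonal of D_tilde.
    degree = [sum(row) for row in a_tilde]
    # Symmetric normalisation: entry (i, j) is divided by sqrt(d_i * d_j).
    normalised = [
        [a_tilde[i][j] / math.sqrt(degree[i] * degree[j]) for j in range(n)]
        for i in range(n)
    ]
    transformed = matmul(matmul(normalised, features), weights)
    return [[max(0.0, value) for value in row] for row in transformed]


# Two nodes joined by one edge, each with a single scalar feature.
adj = [[0.0, 1.0], [1.0, 0.0]]
features = [[1.0], [3.0]]
out = gcn_propagate(adj, features, weights=[[1.0]])
out_negative = gcn_propagate(adj, features, weights=[[-1.0]])
```

In this example every entry of the normalised matrix equals 0.5, so each node ends up with the average of the two features, 2.0. With a weight of -1.0 the ReLU clips both outputs to zero.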

Graph Attention Networks (GAT) and Message Passing

GAT introduces adaptive weighting through attention coefficients:

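$$\alpha_{ij} = \operatorname{softmax}_j\Big(\mathrm{LeakyReLU}\big(a^{\top}[W h_i \,\Vert\, W h_j]\big)\Big)$$

Here $\Vert$ denotes concatenation, and the softmax normalises the scores over the neighbours $j$ of node $i$.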

Multi-head implementations process different relationship aspects simultaneously. Unlike fixed aggregation, this approach prioritises relevant connections during message passing. Computational costs rise linearly with edge count, making GAT suitable for moderately sized graphs.
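The attention coefficients for a single node and a single head can be computed as in the following sketch. It assumes the transformed features W·h are already given, uses a LeakyReLU slope of 0.2, and picks the attention vector `a` by hand; in a trained model both W and `a` are learned.

```python
import math
from typing import List

Vector = List[float]


def leaky_relu(x: float, slope: float = 0.2) -> float:
    return x if x > 0 else slope * x


def attention_coefficients(wh_i: Vector, wh_neighbours: List[Vector], a: Vector) -> List[float]:
    """Softmax over neighbours of LeakyReLU(a . [Wh_i || Wh_j])."""
    scores = [
        leaky_relu(sum(w * x for w, x in zip(a, wh_i + wh_j)))  # list "+" concatenates
        for wh_j in wh_neighbours
    ]
    top = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(score - top) for score in scores]
    total = sum(exps)
    return [value / total for value in exps]


# Hypothetical transformed features for node i and its three neighbours.
wh_i = [1.0, 0.0]
wh_neighbours = [[1.0, 0.0], [0.0, 1.0], [0.0, 1.0]]
a = [0.5, 0.5, 1.0, -1.0]  # hand-picked attention vector

alphas = attention_coefficients(wh_i, wh_neighbours, a)
```

The first neighbour scores 1.5 and the other two score -0.1 after the LeakyReLU, so the first neighbour receives the largest weight and the two identical neighbours share the remainder equally. A multi-head layer would repeat this computation with a separate W and `a` per head.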

| Feature | GCN Approach | GAT Approach |
|---|---|---|
| Aggregation Method | Fixed spectral filters | Learnt attention weights |
| Computational Complexity | O(\|E\|) | O(\|E\| × Heads) |
| Use Cases | Social network analysis | Molecular interaction prediction |

Choosing between architectures involves trade-offs. GCN suits large-scale systems requiring rapid inference, while GAT excels in precision-critical tasks with complex dependency patterns. Recent benchmarks show GAT achieving 9-15% higher accuracy than GCN in protein interface prediction tasks.

Comparative Analysis of GNN Variants and Their Performance

Evaluating architectural effectiveness requires rigorous testing across standardised metrics. Real-world applications reveal critical differences in how frameworks handle structural complexity and data variability.

GCN versus GIN: Strengths and Limitations

Graph Convolutional Networks excel in scenarios requiring computational efficiency. Their fixed aggregation rules process large-scale social networks rapidly. However, PROTEINS dataset results show limitations – 59.38% accuracy versus GIN’s 73.70% in molecular classification.

Graph Isomorphism Networks leverage injective aggregation to preserve unique structural fingerprints. This proves vital for distinguishing chemically similar compounds. The trade-off comes in processing time, with GIN requiring 18-22% more computational resources than GCN.

Benchmarking with Real-World Datasets

Standardised evaluations use diverse data to assess generalisation capabilities. Key findings from recent studies include:

| Architecture | Key Strength | Limitation | Optimal Use Case |
|---|---|---|---|
| GCN | Fast inference | Structural ambiguity | Social media analysis |
| GIN | Precise differentiation | Resource intensity | Drug discovery |

Sum aggregation in GIN models demonstrates 14-16% better pattern recognition than mean/max approaches. For time-sensitive applications, hybrid architectures combining GCN’s speed with GIN’s precision show promise. Practical implementations prioritise either accuracy or efficiency based on domain requirements.

Assessing How Powerful Graph Neural Networks Are in Real-World Scenarios

Real-world validation separates theoretical potential from practical impact in data science. Frameworks designed for interconnected systems demonstrate remarkable versatility across industries, from optimised logistics routing to detecting subtle financial fraud patterns. Their ability to process irregular, relationship-driven data offers distinct advantages over conventional approaches.

Pharmaceutical teams report 23% faster drug candidate screening using these methods, translating to accelerated treatment development. Financial institutions leverage adaptive aggregation techniques, reducing false positives in transaction monitoring by up to 34%. Success metrics depend heavily on strategic implementation – sparse social networks demand different architectures than dense molecular structures.

Challenges persist in resource-intensive deployments. Training sophisticated models requires significant computational power, limiting accessibility for smaller organisations. Dynamic environments like live traffic systems test the limits of real-time inference capabilities. Still, ongoing advancements in hardware optimisation and algorithmic efficiency continue narrowing these gaps.

These frameworks redefine problem-solving in domains where relationships dictate outcomes. While not universally applicable, their proven efficacy in critical sectors underscores a transformative shift in data analysis methodologies. Future developments will likely focus on balancing precision with operational feasibility for broader adoption.

FAQ

What distinguishes graph isomorphism networks from other architectures?

Graph isomorphism networks (GIN) employ injective aggregation functions, enabling them to distinguish non-isomorphic graph structures more effectively than models with max-pooling or mean-pooling. This design enhances their expressive power for tasks like graph classification.

Why are pooling functions critical in graph neural network design?

Pooling functions aggregate node-level features into graph-level representations, crucial for tasks requiring global context. Techniques like hierarchical readout or attention-based pooling preserve structural information while reducing computational complexity.

How do message-passing mechanisms improve node feature learning?

Message passing allows nodes to iteratively integrate information from neighbours through layers. Frameworks like graph attention networks (GAT) refine this process using learnable weights, prioritising relevant connections for tasks such as node classification.

What role does the Weisfeiler-Lehman test play in evaluating GNNs?

The Weisfeiler-Lehman test serves as a reference point for a model’s ability to distinguish non-isomorphic graphs. Architectures matching its discriminative power, such as GIN, often achieve strong performance on benchmark collections like TUDataset or OGB.

Can graph neural networks handle dynamic or heterogeneous graphs?

Advanced variants like temporal GNNs or heterogeneous GATs adapt to evolving structures or multi-relational data. Techniques involve dynamic aggregation strategies and type-specific parameterisation for nodes and edges.

How do real-world applications leverage graph convolutional networks?

Graph convolutional networks (GCN) excel in scenarios requiring semi-supervised learning, such as social network analysis or molecular property prediction. Their spectral-based convolution operations capture locality-preserving features efficiently.

What challenges arise in benchmarking GNN variants?

Disparities in dataset scales, edge heterogeneity, and evaluation protocols complicate fair comparisons. Frameworks like Open Graph Benchmark standardise testing, ensuring robust assessments of architecture performance.

Are there limitations to neighbour aggregation strategies?

Over-smoothing and biased weight allocation can occur with deep aggregation layers. Solutions include residual connections, differential sampling, or hybrid approaches combining attention mechanisms with traditional methods.
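Over-smoothing is easy to reproduce on a toy graph. The sketch below assumes mean aggregation over each node's closed neighbourhood and compares it with one particular remedy, mixing the initial features back in at every round; that initial-residual variant, the mixing weight `alpha`, and the function names are choices made for illustration.

```python
from typing import Dict, List

Graph = Dict[int, List[int]]  # adjacency list: node -> neighbours


def mean_step(graph: Graph, h: Dict[int, float]) -> Dict[int, float]:
    """Replace each value by the mean over the node and its neighbours."""
    return {v: (h[v] + sum(h[u] for u in graph[v])) / (1 + len(graph[v])) for v in graph}


def spread(h: Dict[int, float]) -> float:
    """Gap between the largest and smallest node value."""
    return max(h.values()) - min(h.values())


def run_plain(graph: Graph, h: Dict[int, float], rounds: int) -> Dict[int, float]:
    for _ in range(rounds):
        h = mean_step(graph, h)
    return h


def run_initial_residual(
    graph: Graph, h: Dict[int, float], rounds: int, alpha: float = 0.5
) -> Dict[int, float]:
    h_init = dict(h)
    for _ in range(rounds):
        smoothed = mean_step(graph, h)
        # Blend the smoothed value with the node's original feature.
        h = {v: alpha * h_init[v] + (1 - alpha) * smoothed[v] for v in graph}
    return h


path = {0: [1], 1: [0, 2], 2: [1]}
h0 = {0: 0.0, 1: 0.0, 2: 1.0}

plain_spread = spread(run_plain(path, h0, rounds=20))
residual_spread = spread(run_initial_residual(path, h0, rounds=20))
```

After twenty rounds of plain averaging the three nodes are nearly indistinguishable, whereas the residual variant keeps a clear gap between node 2 and the rest.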