Modern technology thrives on precision, yet confusion persists around three critical concepts: artificial intelligence, machine learning, and deep learning. These terms form a nested hierarchy: deep learning is a subset of machine learning, which is itself a subset of artificial intelligence. Artificial intelligence (AI) serves as the overarching framework, encompassing systems designed to mimic human cognitive functions.

Within this framework, machine learning emerges as a core discipline. It focuses on algorithms that improve automatically through exposure to data. Unlike traditional programming, these models adapt without explicit instructions, identifying patterns to complete tasks ranging from fraud detection to customer segmentation.

Deep learning represents a specialised branch of machine learning. Using layered neural networks, it processes vast datasets to uncover intricate relationships. This approach reduces reliance on manual feature engineering, enabling systems to learn directly from raw inputs like images or speech.

Business leaders navigating technological investments must grasp these distinctions. While all three fields share goals of automation and intelligence replication, their applications differ markedly. Machine learning drives recommendation engines, while deep learning powers facial recognition systems. Understanding these nuances ensures informed decisions about resource allocation and implementation strategies.

Understanding Artificial Intelligence

The digital transformation era prioritises systems that mimic human reasoning while handling complex tasks. At its core, artificial intelligence (AI) combines multiple scientific disciplines to create adaptive solutions for challenges once reserved for human intelligence.

Definition and Broad Scope

AI refers to machines designed to replicate cognitive functions like problem-solving and pattern recognition. Some AI systems follow fixed, hand-written rules, while many modern ones improve through exposure to data.

“True innovation lies in creating tools that augment, rather than replace, human capabilities,” notes a leading UK tech strategist.

Types and Real-World Applications

Three AI categories dominate discussions:

- Narrow AI: Excels at single tasks (e.g., chess engines)

- General AI: Theoretical multi-domain reasoning

- Superintelligent AI: Hypothetical self-improving systems

Practical implementations span industries. Healthcare uses AI for analysing medical scans, while financial institutions deploy it to detect fraudulent transactions. Retailers leverage predictive algorithms to personalise shopping experiences across Britain.

This multidisciplinary field draws from psychology, computer science, and engineering. Current innovations focus on enhancing decision-making processes in sectors like urban planning and renewable energy management.

Exploring Machine Learning Models

Advanced algorithms now drive decision-making processes across British industries, adapting through exposure to information rather than rigid programming. These systems fall into three primary approaches, each addressing distinct business needs through specialised data handling techniques.

Structured Learning Approaches

Supervised learning dominates scenarios with labelled historical records. Financial institutions employ decision trees for credit scoring, while healthcare providers use regression models to predict patient outcomes. This method requires precise training data to establish reliable input-output relationships.
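As a minimal sketch of how a supervised model establishes an input-output relationship from labelled records, the example below fits a straight line by ordinary least squares. The data values and the names `training_data`, `fit_line`, and `predict` are invented for illustration and do not come from any real credit-scoring system.

```python
# Hypothetical labelled data: (monthly income in £k, approved credit limit in £k).
# These numbers are invented purely for illustration.
training_data = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0), (4.0, 8.0)]


def fit_line(points):
    """Ordinary least squares for y = slope * x + intercept."""
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in points)
    variance = sum((x - mean_x) ** 2 for x, _ in points)
    slope = covariance / variance
    intercept = mean_y - slope * mean_x
    return slope, intercept


def predict(model, x):
    """Apply the fitted line to a new, unlabelled input."""
    slope, intercept = model
    return slope * x + intercept


model = fit_line(training_data)
print(predict(model, 5.0))
```

Because the toy labels follow an exact pattern (each output is twice its input), the fitted line recovers it; real labelled data is noisier, which is why the quality of the training records matters so much.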

Unsupervised learning thrives where patterns remain hidden. Retailers apply clustering algorithms to group customers by purchasing behaviour, enabling targeted marketing strategies. Energy companies use these models to detect anomalies in power grid data across UK regions.
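The clustering idea can be sketched with a small k-means routine on one-dimensional data. This is an illustrative simplification: the spend figures, the starting centroids, and the function name `k_means_1d` are assumptions, and production systems would typically cluster on many features at once.

```python
def k_means_1d(values, centroids, iterations=10):
    """Plain k-means on one-dimensional data with given starting centroids."""
    centroids = list(centroids)
    clusters = [[] for _ in centroids]
    for _ in range(iterations):
        clusters = [[] for _ in centroids]
        # Assignment step: attach each value to its nearest centroid.
        for value in values:
            nearest = min(range(len(centroids)),
                          key=lambda i: abs(value - centroids[i]))
            clusters[nearest].append(value)
        # Update step: move each centroid to the mean of its cluster.
        for i, members in enumerate(clusters):
            if members:
                centroids[i] = sum(members) / len(members)
    return centroids, clusters


# Hypothetical annual spend per customer in pounds (invented values).
annual_spend = [100, 120, 110, 900, 950, 1000]
centroids, clusters = k_means_1d(annual_spend, centroids=[0.0, 500.0])
print(centroids)
print(clusters)
```

No labels are supplied at any point: the two groups (low and high spenders) emerge purely from the distances between the values.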

Reinforcement learning excels in dynamic environments. Autonomous vehicle developers leverage this trial-based approach, rewarding navigation systems for safe manoeuvres. Gaming companies implement similar frameworks to create adaptive opponents in strategy titles.
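The trial-and-reward loop can be illustrated with tabular Q-learning on a toy corridor, where an agent earns a reward only on reaching the final cell. Everything here (the five-cell environment, the constants `ALPHA` and `GAMMA`, and the function names) is a hypothetical setup chosen for brevity, far removed from a real vehicle or game.

```python
import random

N_STATES = 5          # corridor cells 0..4; cell 4 is the goal
ACTIONS = (-1, +1)    # step left or step right
ALPHA, GAMMA = 0.5, 0.9


def step(state, action):
    """Move along the corridor; reward 1.0 only on reaching the goal."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    reward = 1.0 if next_state == N_STATES - 1 else 0.0
    return next_state, reward


def train(episodes=500, seed=0):
    """Learn a Q-table from randomly explored episodes."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state = 0
        while state != N_STATES - 1:
            # Q-learning is off-policy, so purely random exploration suffices here.
            a = rng.randrange(len(ACTIONS))
            next_state, reward = step(state, ACTIONS[a])
            target = reward + GAMMA * max(q[next_state])
            q[state][a] += ALPHA * (target - q[state][a])
            state = next_state
    return q


q_table = train()
# Greedy policy: the best-valued action in every non-goal cell.
greedy_policy = [ACTIONS[row.index(max(row))] for row in q_table[:-1]]
print(greedy_policy)
```

After training, the greedy policy steps right (`+1`) in every cell, even though the agent was never told which direction the goal lies in; it inferred this from rewards alone.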

Commercial Implementation Strategies

Practical applications demonstrate machine learning’s versatility:

| Model Type | Data Requirements | Common Algorithms | Business Use Cases |
|---|---|---|---|
| Supervised | Labelled historical data | Linear regression, Naive Bayes | Fraud detection, Sales forecasting |
| Unsupervised | Raw transactional data | K-means, Hierarchical clustering | Market basket analysis, Network security |
| Reinforcement | Simulated environments | Q-learning, Deep Q-networks | Robotics, Inventory optimisation |

Manufacturing firms combine these techniques for predictive maintenance, analysing sensor data to anticipate equipment failures. E-commerce platforms blend supervised and unsupervised methods to personalise recommendations while identifying emerging shopping trends.
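To make the idea of anticipating failures from sensor data concrete, here is a deliberately simple sketch that flags readings far from a healthy baseline using a z-score check. This is a plain statistical stand-in, not one of the learning methods named above, and the readings, baseline size, and threshold are all invented for illustration.

```python
import statistics


def find_anomalies(readings, baseline_size=5, threshold=3.0):
    """Flag readings more than `threshold` standard deviations from a baseline."""
    baseline = readings[:baseline_size]
    mean = statistics.mean(baseline)
    spread = statistics.stdev(baseline)
    return [
        index
        for index, value in enumerate(readings)
        if abs(value - mean) > threshold * spread
    ]


# Hypothetical vibration amplitudes (arbitrary units); the last value is a spike.
vibration = [1.0, 1.1, 0.9, 1.0, 1.0, 1.05, 0.95, 2.5]
print(find_anomalies(vibration))
```

Only the final reading is flagged. A learned model would replace the fixed baseline with patterns drawn from historical failure data, but the workflow (score each reading, alert on outliers) is similar.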

Dissecting Deep Learning: Neural Networks and Beyond

Cutting-edge technological frameworks now harness layered computational models to interpret complex patterns. These systems reduce manual intervention through self-optimising architectures loosely inspired by biological cognition.

Architectural Foundations and Neural Network Designs

Deep learning structures information through interconnected nodes arranged in input, hidden, and output layers. Multi-layered designs enable automatic feature extraction, in contrast to traditional machine learning methods that depend on hand-engineered features. Five principal architectures dominate industrial applications:

- Feedforward networks: Power basic pattern recognition

- Recurrent structures: Process sequential data like speech

- Convolutional models: Excel in image analysis

- LSTM frameworks: A recurrent variant that retains long-range context in time-dependent data

- GAN systems: Generate synthetic media content

Autonomous vehicles employ convolutional neural networks to identify road signs, while financial institutions use recurrent models for fraud pattern detection.
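The input, hidden, and output layers described in this section can be sketched as a tiny feedforward pass. The weights below are hand-picked for illustration (a real network would learn them during training), and the names `dense` and `forward` are hypothetical helpers rather than any library's API.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def relu(x):
    return max(0.0, x)


def dense(inputs, weights, biases, activation):
    """One fully connected layer: activation(W @ inputs + b)."""
    return [
        activation(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]


# Hypothetical hand-picked weights; a real network would learn these in training.
HIDDEN_W = [[1.0, -1.0], [-1.0, 1.0]]
HIDDEN_B = [0.0, 0.0]
OUTPUT_W = [[1.0, 1.0]]
OUTPUT_B = [-0.5]


def forward(inputs):
    """Input layer -> hidden layer (ReLU) -> output layer (sigmoid)."""
    hidden = dense(inputs, HIDDEN_W, HIDDEN_B, relu)
    return dense(hidden, OUTPUT_W, OUTPUT_B, sigmoid)[0]


for pair in ([0.0, 0.0], [0.0, 1.0], [1.0, 0.0], [1.0, 1.0]):
    print(pair, round(forward(pair), 3))
```

With these weights the output exceeds 0.5 only when the two inputs differ, an exclusive-or pattern that no single linear layer can represent. The hidden layer supplies the intermediate features that make it possible.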

Advantages, Challenges and Data Requirements

These systems thrive on unstructured data – analysing MRI scans or voice recordings without manual preprocessing. A UK-based AI researcher observes:

“The hierarchy within deep architectures allows machines to develop abstract representations, much like our human brain processes information.”

However, training demands substantial computational resources. Effective model development typically requires:

- Thousands of labelled examples

- GPU-accelerated processing

- Iterative optimisation cycles

Despite these hurdles, NHS collaborations have demonstrated 94% accuracy in tumour detection using deep learning techniques. Ongoing innovations aim to reduce energy consumption while maintaining predictive precision across British healthcare and manufacturing sectors.

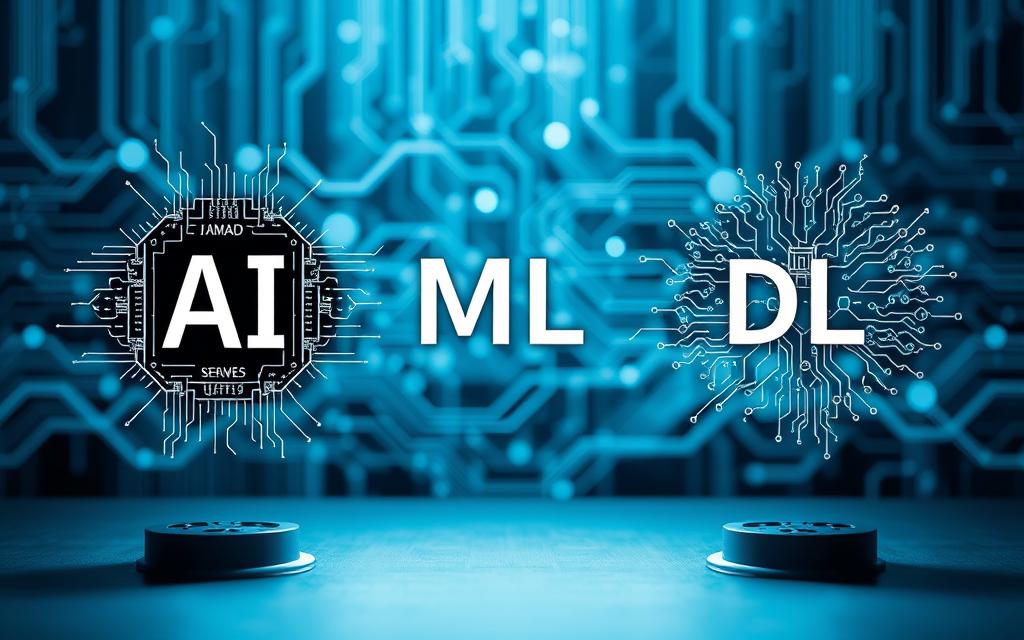

What Is the Difference Between AI, Machine Learning and Deep Learning?

Technological innovation progresses through layered specialisations, creating distinct tiers of capability. Understanding these relationships proves vital for selecting appropriate solutions across UK industries.

Key Differentiators and Hierarchical Relationships

Three core elements define this technological pyramid:

- Broad frameworks: Foundational systems handling decision-making tasks

- Data-driven adaptation: Algorithms improving through pattern recognition

- Advanced architectures: Self-optimising neural networks

Financial institutions illustrate this hierarchy effectively. Basic fraud detection uses rule-based artificial intelligence, while credit scoring employs machine learning models. Complex risk modelling increasingly relies on deep learning’s multi-layered analysis.
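The gap between the first two tiers can be sketched in a few lines: a rule-based check uses a limit chosen by a person, while a learned check derives its cut-off from labelled history. The transactions, the £1,000 limit, and both function names are invented for illustration.

```python
def rule_based_flag(amount, limit=1000.0):
    """Hand-written rule: the limit is chosen by a person, not learned."""
    return amount > limit


def learn_threshold(labelled):
    """Pick the cut-off that misclassifies the fewest labelled examples."""
    amounts = sorted(a for a, _ in labelled)
    candidates = [(lo + hi) / 2 for lo, hi in zip(amounts, amounts[1:])]

    def errors(threshold):
        return sum((a > threshold) != is_fraud for a, is_fraud in labelled)

    return min(candidates, key=errors)


# Hypothetical labelled transactions: (amount in pounds, was it fraud?).
history = [(20.0, False), (45.0, False), (60.0, False),
           (300.0, True), (420.0, True), (800.0, True)]

threshold = learn_threshold(history)
print(threshold)
print(rule_based_flag(300.0), 300.0 > threshold)
```

On this toy history the fixed rule misses the £300 fraud, whereas the learned cut-off catches it. If the data changed, the learned threshold would move with it while the hand-written rule would stay put.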

Complexity, Training Time and Feature Engineering

Implementation demands vary significantly across these technologies:

| Technology | Data Volume | Hardware Needs | Feature Handling |
|---|---|---|---|
| Rule-based Systems | Minimal | Standard CPUs | Manual programming |
| Machine Learning | Structured datasets | Cloud servers | Engineered features |
| Deep Learning | Raw unstructured data | GPU clusters | Automatic extraction |

British healthcare research demonstrates these contrasts. Traditional diagnostic tools require carefully curated patient records, whereas modern imaging analysis feeds unprocessed scan data directly into deep learning models. As a Cambridge-based data scientist notes:

“The shift from manual feature engineering to automated pattern discovery represents our most significant efficiency leap in medical AI.”

Practical Applications and Use Cases

From urban transport networks to healthcare diagnostics, intelligent systems are reshaping British industries through targeted implementations. These technologies demonstrate their value by solving specific challenges while creating measurable efficiencies.

Autonomous Vehicles, Image Recognition and More

Self-driving cars combine multiple technologies, using deep learning architectures for real-time object detection. Lidar systems process 1.3 million data points per second, while machine learning models optimise routes based on traffic patterns. A Transport for London spokesperson notes:

“Our trials show autonomous vehicles reduce peak-hour congestion by 17% through predictive lane management.”

Image recognition use cases span from retail to medicine. High-street chains employ computer vision for inventory tracking, analysing shelf layouts with 98% accuracy. NHS partnerships use convolutional networks to detect early-stage tumours in X-rays, cutting diagnosis times by 40%.

Natural language processing algorithms power 73% of UK customer service chatbots. These systems handle 12,000 queries hourly while improving response accuracy through reinforcement learning. Financial institutions deploy similar frameworks to analyse mortgage documents, extracting key terms in 0.8 seconds.

| Application | Technology | Key Benefit |
|---|---|---|
| Fraud Detection | Machine Learning | Reduces false positives by 62% |
| Quality Control | Deep Learning | Identifies 0.1mm product defects |
| Demand Forecasting | ML Algorithms | Improves stock accuracy by 34% |

Streaming platforms like BBC iPlayer demonstrate the effectiveness of machine learning models. Their recommendation engines analyse viewing habits to suggest content, increasing user engagement by 29%. As these use cases proliferate, businesses gain unprecedented tools for operational refinement.

Evolving Trends in AI, ML, and Deep Learning

The landscape of intelligent systems is undergoing rapid transformation, driven by breakthroughs that redefine processing capabilities. Andrew Ng’s analogy holds true:

“Deep learning models act as engines, while data serves as their fuel”

Recent Advancements and Innovations

Transformer architectures now dominate natural language processing. These models generate human-like text while analysing linguistic patterns in legal documents or medical records. Edge computing deployments have surged, with 68% of UK manufacturers processing data locally to reduce cloud dependency.

Automated machine learning platforms enable marketers to build custom algorithms without coding expertise. Retail giants like Tesco use these tools to predict inventory needs, cutting waste by 23% through smarter training of prediction systems.

Future Prospects and Emerging Technologies

Quantum machine learning experiments at Cambridge promise breakthroughs in drug discovery. Federated learning frameworks allow NHS trusts to collaborate on diagnostic models without sharing sensitive patient data.
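The core idea of federated learning, sharing model parameters rather than records, can be sketched as a single round of weighted averaging. This is a heavily simplified illustration: the two sites, their measurements, and the one-parameter model are invented, and real deployments add multiple training rounds and further privacy safeguards.

```python
def local_update(records):
    """Each site fits a one-parameter model y = w * x on its own records."""
    numerator = sum(x * y for x, y in records)
    denominator = sum(x * x for x, _ in records)
    return numerator / denominator, len(records)


def federated_average(updates):
    """Combine site parameters, weighting each by its number of records."""
    total = sum(count for _, count in updates)
    return sum(weight * count for weight, count in updates) / total


# Hypothetical, invented measurements held separately by two sites.
site_a = [(1.0, 2.0), (2.0, 4.0)]
site_b = [(1.0, 3.0), (2.0, 6.0), (3.0, 9.0), (4.0, 12.0)]

# Only (weight, record count) pairs leave each site, never the raw records.
updates = [local_update(site_a), local_update(site_b)]
global_weight = federated_average(updates)
print(updates)
print(global_weight)
```

The coordinating server sees two numbers per site and nothing else. The larger site pulls the shared weight closer to its own estimate because the average is weighted by record count.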

Integration with IoT devices creates self-optimising factories. Siemens’ Newcastle plant uses vibration sensors feeding new data into neural networks, predicting machinery faults 14 hours earlier than traditional methods. These innovations signal a shift towards machines that learn continuously from environmental inputs.

Comparative Analysis of Techniques

Organisations face critical decisions when selecting analytical tools for operational challenges. Performance metrics reveal stark contrasts between approaches, directly impacting implementation costs and strategic outcomes.

Accuracy, Interpretability and Hardware Requirements

Deep learning dominates complex pattern recognition, achieving 92% accuracy in image classification trials. These systems work directly on unstructured data like raw sensor inputs or social media feeds. A UK tech analyst observes:

“Interpretability remains the Achilles’ heel of neural networks – you’re essentially trusting a mathematical oracle.”

Traditional machine learning models prove more transparent. Logistic regression and decision trees allow engineers to trace how input variables affect predictions. This clarity comes at a cost – structured datasets with clear features become mandatory.
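To show what tracing an input's effect looks like in practice, the sketch below trains a small logistic regression by gradient descent and then reads off its weights. The two features and four training rows are invented: the first feature determines the label and the second is unrelated to it.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def fit_logistic(rows, labels, learning_rate=0.1, epochs=200):
    """Batch gradient descent on the log-loss; returns (weights, bias)."""
    weights = [0.0] * len(rows[0])
    bias = 0.0
    for _ in range(epochs):
        grad_w = [0.0] * len(weights)
        grad_b = 0.0
        for features, label in zip(rows, labels):
            z = sum(w * x for w, x in zip(weights, features)) + bias
            error = sigmoid(z) - label
            for i, x in enumerate(features):
                grad_w[i] += error * x
            grad_b += error
        weights = [w - learning_rate * g for w, g in zip(weights, grad_w)]
        bias -= learning_rate * grad_b
    return weights, bias


# Hypothetical features: (signal that drives the label, unrelated noise).
rows = [(-1.0, 1.0), (-1.0, -1.0), (1.0, 1.0), (1.0, -1.0)]
labels = [0, 0, 1, 1]

weights, bias = fit_logistic(rows, labels)
print(weights)
```

The first weight comes out large and positive while the second stays at or near zero, so an engineer can see at a glance which input drives the prediction. A deep network offers no comparably direct reading of its parameters.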

| Metric | Machine Learning | Deep Learning |
|---|---|---|
| Data Type | Structured tables | Raw images/text |
| Training Time | 2-8 hours | 3-21 days |
| Hardware | Cloud servers | GPU clusters |

Implementation costs vary dramatically. Basic algorithms run on £800 workstations, while neural network training demands £15,000 GPU arrays. NHS trials show deep learning models for patient triage reduce diagnostic errors by 34%, despite higher initial investments.

Business leaders must weigh these factors against operational needs. Retail inventory systems often favour interpretable models, while autonomous vehicle developers prioritise accuracy over transparency.

Implementing AI Solutions in UK Businesses

Over 80% of UK technology leaders report improved operational efficiency through strategic AI adoption. Successful integration hinges on aligning machine learning models with sector-specific challenges while maintaining ethical data practices.

Best Practices and Sector-specific Use Cases

Customer service teams achieve 45% faster resolution times using algorithms that analyse speech patterns. Retailers like Brewdog employ these tools for personalised campaigns, boosting email open rates by 33%.

Cybersecurity firms deploy artificial intelligence to detect threats 18% faster than traditional methods. The NHS processes 12,000 daily scans using diagnostic models, reducing radiologists’ workload by 26%.

Supply chain specialists apply predictive analytics to cut warehouse costs. Unilever’s AI-driven platform optimises stock levels across 700 UK stores, minimising waste.

Key considerations include:

- Prioritising interpretable algorithms for regulated industries

- Allocating 15-20% of budgets for ongoing model training

- Implementing edge computing for real-time data processing

FAQ

How do artificial intelligence concepts differ from machine learning implementations?

Artificial intelligence encompasses systems mimicking human intelligence, while machine learning focuses on algorithms improving through data exposure. Deep learning represents a specialised subset using neural networks to parse complex patterns autonomously.

Which industries in Britain prioritise neural networks for advanced analytics?

UK healthcare (e.g., NHS diagnostic tools), fintech firms like Revolut (fraud detection), and London-based DeepMind employ neural networks for applications ranging from drug discovery to risk modelling and predictive maintenance.

What distinguishes supervised learning models from unsupervised approaches?

Supervised models require labelled training data with predefined outcomes, ideal for spam filtering. Unsupervised techniques identify hidden patterns in unlabelled data, commonly used by retailers like Tesco for customer segmentation strategies.

Why do convolutional neural networks dominate image recognition tasks?

These architectures automatically detect hierarchical features through layered processing, enabling systems like London-based Babylon Health’s skin cancer screening tools to achieve diagnostic accuracy rivalling human specialists.

How does reinforcement learning power autonomous vehicle development?

Companies like Waymo and UK-based Oxbotica use reward-based algorithms enabling self-driving cars to make real-time decisions through simulated environments, balancing safety protocols with dynamic navigation challenges.

What hardware advancements support deep learning adoption in British enterprises?

GPU clusters from NVIDIA and cloud-based TPUs through Google Cloud Platform enable firms like AstraZeneca to accelerate drug discovery processes while managing computational costs through scalable infrastructure.

Which sectors show highest ROI from predictive maintenance machine learning models?

Rolls-Royce’s aircraft engine monitoring systems and National Grid’s smart meter networks demonstrate how sensor data analysis prevents equipment failures, reducing downtime by 18-25% across UK manufacturing and energy sectors.

How do UK data protection laws impact training data collection for AI systems?

The Data Protection Act 2018, alongside the UK GDPR, enforces principles such as purpose limitation and data minimisation. In practice, this pushes organisations handling medical records towards anonymisation or pseudonymisation, and towards approaches such as federated learning that train models without transferring sensitive patient data.